Lede: What happened, who, when and why it matters

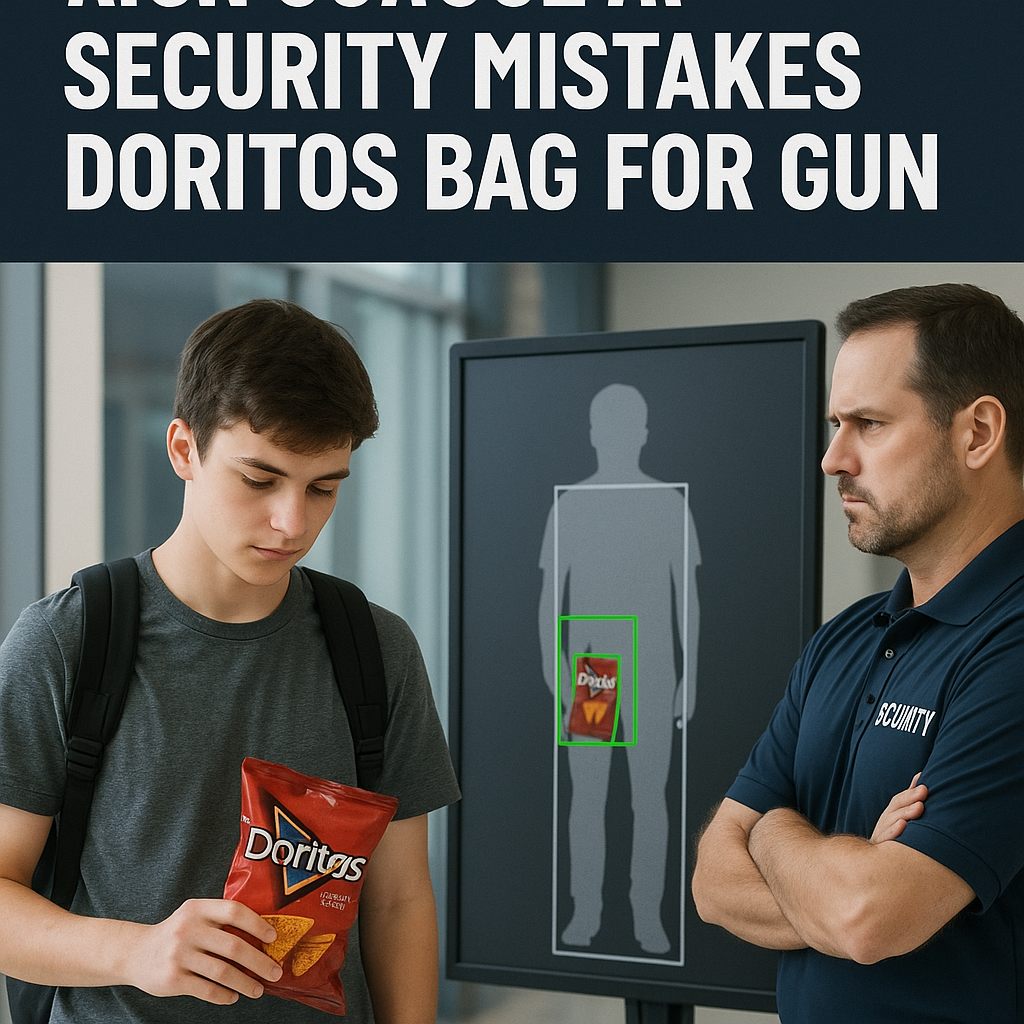

This week, at a Northern California high school, an on-campus AI-powered security camera generated an alert identifying a Doritos chip bag as a possible firearm. The incident, reported to school administrators in October 2025, forced a temporary lockdown of a hallway and prompted the district to audit automated alert thresholds. The misidentification has reignited debate over false positives, algorithmic bias and the growing use of AI security in K-12 environments.

How modern AI security systems work and where they fail

Many schools have moved from analog cameras to AI-enhanced systems that use object-detection models to classify items in real time. These models scan live feeds and flag objects that match training-data patterns for immediate review. Vendors typically tune systems to prioritize sensitivity so potential threats are not missed, but higher sensitivity increases false positive rates. In this case, the textured, multicolored surface of a Doritos bag visually matched the model’s learned features for a firearm, triggering an alert that required human verification.
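The sensitivity trade-off described above can be made concrete with a small sketch. It assumes a hypothetical detector that gives each object a firearm confidence score between 0 and 1 and raises an alert when the score meets a threshold. The `Detection` class, the `alert_counts` helper and every score are invented for illustration and do not come from the district or any vendor.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str            # what the object really was, known only after human review
    firearm_score: float  # hypothetical model confidence that the object is a firearm


# Made-up scores for illustration only; not data from the incident or any vendor.
SAMPLE = [
    Detection("firearm", 0.91),
    Detection("firearm", 0.58),
    Detection("chip bag", 0.62),
    Detection("phone", 0.35),
    Detection("umbrella", 0.48),
    Detection("water bottle", 0.12),
]


def alert_counts(detections, threshold):
    """Return (true_alerts, false_alerts, missed_firearms) at a given threshold."""
    true_alerts = false_alerts = missed = 0
    for d in detections:
        flagged = d.firearm_score >= threshold
        is_firearm = d.label == "firearm"
        if flagged and is_firearm:
            true_alerts += 1
        elif flagged:
            false_alerts += 1
        elif is_firearm:
            missed += 1
    return true_alerts, false_alerts, missed


# A lower threshold means higher sensitivity: fewer misses, more false alarms.
for threshold in (0.3, 0.5, 0.7):
    print(threshold, alert_counts(SAMPLE, threshold))
```

On this toy data, the most sensitive setting (0.3) catches both firearms but also flags three harmless objects, while the strictest setting (0.7) raises no false alarms and misses one firearm.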

False positives and operational costs

False positives create real operational burdens. Each automated alert may require security staff to divert resources, pause classroom activities, or enact lockdown protocols. Administrators say that even a single false alarm can erode trust in the system, and that repeated false alarms can desensitize staff to genuine threats, a phenomenon security professionals call “alert fatigue.” School districts must weigh the trade-off between preventing rare but catastrophic events and the recurring disruption caused by incorrect classifications.

Privacy, bias and legal questions

Beyond operational disruption, the episode raises policy questions about who reviews alerts, how long footage is stored, and what transparency frameworks are in place. Civil liberties groups have repeatedly warned that opaque AI surveillance increases the risk of biased outcomes and privacy intrusion. When AI models are trained on limited or unrepresentative data sets, they can produce skewed performance in real-world school environments, especially in diverse student populations and cluttered hallways where common objects can confuse detectors.

District response and vendor responsibilities

The school district temporarily adjusted alert sensitivity and initiated a review of vendor logs and training data. Vendors supplying AI security services typically include configurable thresholds and human-in-the-loop review workflows; however, the effectiveness of those safeguards depends on proper configuration and staff training. Best-practice guidance from industry groups recommends routine calibration, documented escalation procedures and independent audits to keep false positive rates manageable.
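One way a routine calibration check might look in practice is a periodic tally of how many reviewed alerts turned out to be false alarms. The sketch below is an assumption-laden illustration: the log format, the `false_alarm_share` and `needs_recalibration` helpers and the 0.5 limit are all hypothetical, not any vendor's actual interface. It measures the share of alerts that were false, which is not the same as a statistical false positive rate, since harmless objects that never triggered an alert do not appear in the log.

```python
# Hypothetical review log: each alert gets a human verdict once it is checked.
EXAMPLE_LOG = [
    {"alert_id": 1, "verdict": "not_threat"},
    {"alert_id": 2, "verdict": "not_threat"},
    {"alert_id": 3, "verdict": "threat"},
    {"alert_id": 4, "verdict": "not_threat"},
    {"alert_id": 5, "verdict": "pending"},  # not yet reviewed, so excluded below
]


def false_alarm_share(review_log):
    """Fraction of reviewed alerts that a human marked as not a threat."""
    reviewed = [r for r in review_log if r["verdict"] in ("threat", "not_threat")]
    if not reviewed:
        return None  # nothing reviewed yet, so no share can be computed
    false_alarms = sum(1 for r in reviewed if r["verdict"] == "not_threat")
    return false_alarms / len(reviewed)


def needs_recalibration(share, limit=0.5):
    """Flag the system for review when the false-alarm share exceeds an assumed limit."""
    return share is not None and share > limit


share = false_alarm_share(EXAMPLE_LOG)
print(share, needs_recalibration(share))
```

Tracking this figure over time would give a district a documented basis for asking a vendor to retune thresholds.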

Context: why this matters now

Interest in AI security systems for schools spiked after high-profile campus attacks in the last decade. Budget-conscious districts have invested in automated detection to augment limited security staffing. But as adoption rises, so do concerns about reliability and unintended consequences. An overreliance on imperfect models can shift responsibility away from human judgment without improving safety in a meaningful way.

Analysis: trade-offs and practical steps

Practical steps districts can take include reducing detection sensitivity in non-critical areas (that is, raising the confidence score an object must reach before it triggers an alert), creating rapid verification channels to minimize classroom disruptions, and requiring vendors to provide explainability reports that show why a given alert was triggered. Independent third-party testing of detection models against realistic school scenarios can surface failure modes, such as snack packaging resembling other objects, before deployment.
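The first two steps can be sketched together: a per-area threshold table and a rule that sends every alert to a human reviewer before any lockdown decision. The zone names, threshold values and the `route_alert` function are hypothetical, chosen only to illustrate the idea.

```python
# Hypothetical per-zone confidence thresholds; a higher value means lower sensitivity.
ZONE_THRESHOLDS = {
    "entrance": 0.5,   # critical area: keep sensitivity high
    "hallway": 0.6,
    "cafeteria": 0.8,  # cluttered, snack-heavy area: require more confidence
}
DEFAULT_THRESHOLD = 0.6  # assumed fallback for zones not listed above


def route_alert(zone, firearm_score):
    """Decide what happens to a detection; nothing triggers a lockdown automatically."""
    threshold = ZONE_THRESHOLDS.get(zone, DEFAULT_THRESHOLD)
    action = "human_review" if firearm_score >= threshold else "ignore"
    # Returning the inputs alongside the action leaves a simple record of
    # why the alert was or was not raised.
    return {
        "zone": zone,
        "score": firearm_score,
        "threshold": threshold,
        "action": action,
    }


print(route_alert("entrance", 0.62))
print(route_alert("cafeteria", 0.62))
```

With these assumed settings, the same 0.62 score goes to a human reviewer at the entrance but is ignored in the cafeteria.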

Expert insights and the outlook

Privacy and safety specialists emphasize that technology should support, not replace, trained human responders. Schools considering AI security need clear policies that define alert review timelines, data retention limits, and redress mechanisms for students affected by false alarms. As districts balance safety imperatives with civil liberties, expect more public scrutiny, calls for transparency, and requests for independent audits of AI models used in educational settings. In the long term, lawmakers and school boards are likely to push for stricter procurement standards and testing requirements so that misidentifications like the Doritos-bag incident become less common and less disruptive.